15.1 Interpreting and Explaining

High-Stakes Applications

- healthcare

  - Why does it say I’m at high risk for heart disease?

  - Why did my insurance company deny my claim?

- criminal justice (e.g., ProPublica’s investigation of COMPAS)

  - Why was this defendant denied bail?

  - Why target this neighborhood for increased policing?

- finance

  - Why was my loan application denied?

  - Why was my transaction flagged as fraudulent?

- hiring

  - Why was my job application rejected?

  - Why was I not promoted?

Outline

- Explaining black-box models

  - Feature importance (permutation)

  - Global explanations (partial dependence)

  - Local explanations (SHAP, LIME)

- Interpretable models

  - Decision rule lists

  - Additive risk score models

Interpretability vs Explainability

- Interpretability: can we understand how the model works?

- Explainability: can we explain why the model made a particular prediction?

Explaining Black-Box Models

Black-box model: a model that is difficult to interpret (e.g., random forest, gradient boosting model, deep neural network)

Why explain?

- Debugging

- Feature engineering

- Collaborating with domain experts

- Helping users understand when to trust a model

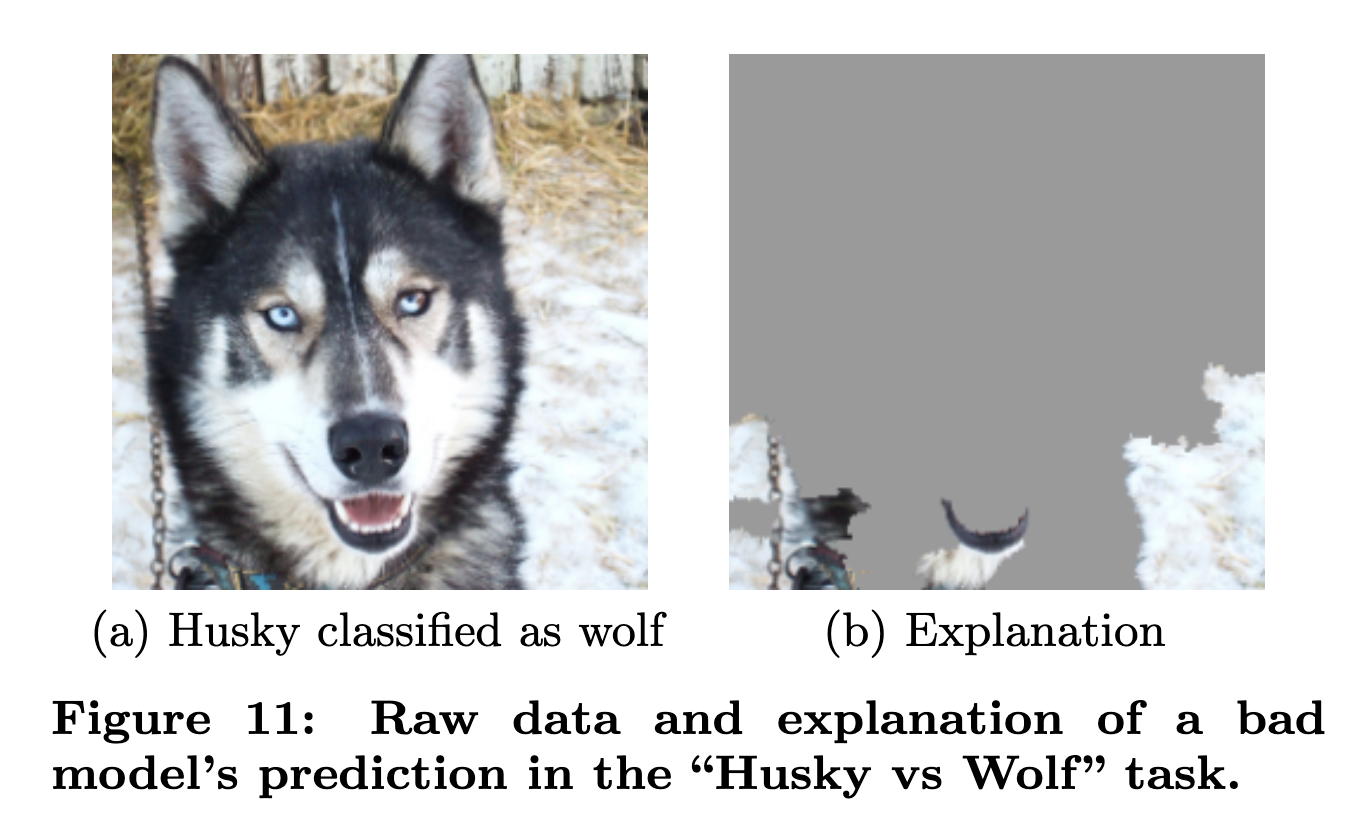

Image from: “Why Should I Trust You?”: Explaining the Predictions of Any Classifier

Feature Importance

- We gave the model many features, but which ones did it use?

- Simple approach: remove each feature, retrain, and see how much the model’s performance decreases

  - but we’d have to retrain the model once per feature

  - if two features are correlated, removing one might not affect the model’s performance, because the model can rely on the other one instead

- Faster approach, permutation feature importance: randomly shuffle the values of one feature in the test set and measure how much the model’s performance decreases; no retraining is needed

  - but this doesn’t fix the problem of correlated features
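Permutation importance can be sketched in plain Python as follows. This is a minimal illustration, not a library implementation: the model `black_box`, the synthetic test set, and the parameters `n_repeats` and `seed` are all hypothetical, and mean squared error is assumed as the performance metric. In practice, scikit-learn provides `sklearn.inspection.permutation_importance` for fitted estimators.

```python
import random


def black_box(row):
    # Hypothetical "black-box" model: relies heavily on feature 0,
    # slightly on feature 1, and ignores feature 2 entirely.
    x0, x1, _x2 = row
    return 3.0 * x0 + 0.5 * x1


def mse(model, X, y):
    """Mean squared error of `model` on rows X with targets y."""
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average increase in test MSE when each feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + (value,) + row[j + 1:] for row, value in zip(X, column)]
            increases.append(mse(model, X_perm, y) - baseline)  # no retraining needed
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical synthetic test set: the target follows the model exactly.
data_rng = random.Random(42)
X_test = [tuple(data_rng.gauss(0, 1) for _ in range(3)) for _ in range(200)]
y_test = [3.0 * x0 + 0.5 * x1 for x0, x1, _ in X_test]

importances = permutation_importance(black_box, X_test, y_test)
print([round(v, 2) for v in importances])
```

Because the model never reads feature 2, shuffling it leaves every prediction unchanged, so its importance is exactly zero; feature 0, with the largest coefficient, gets the largest importance.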

Read more:

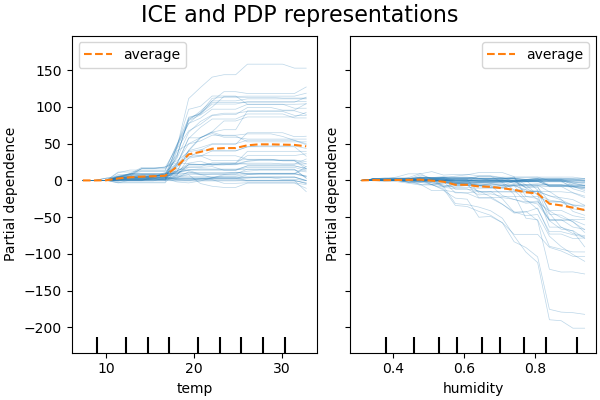

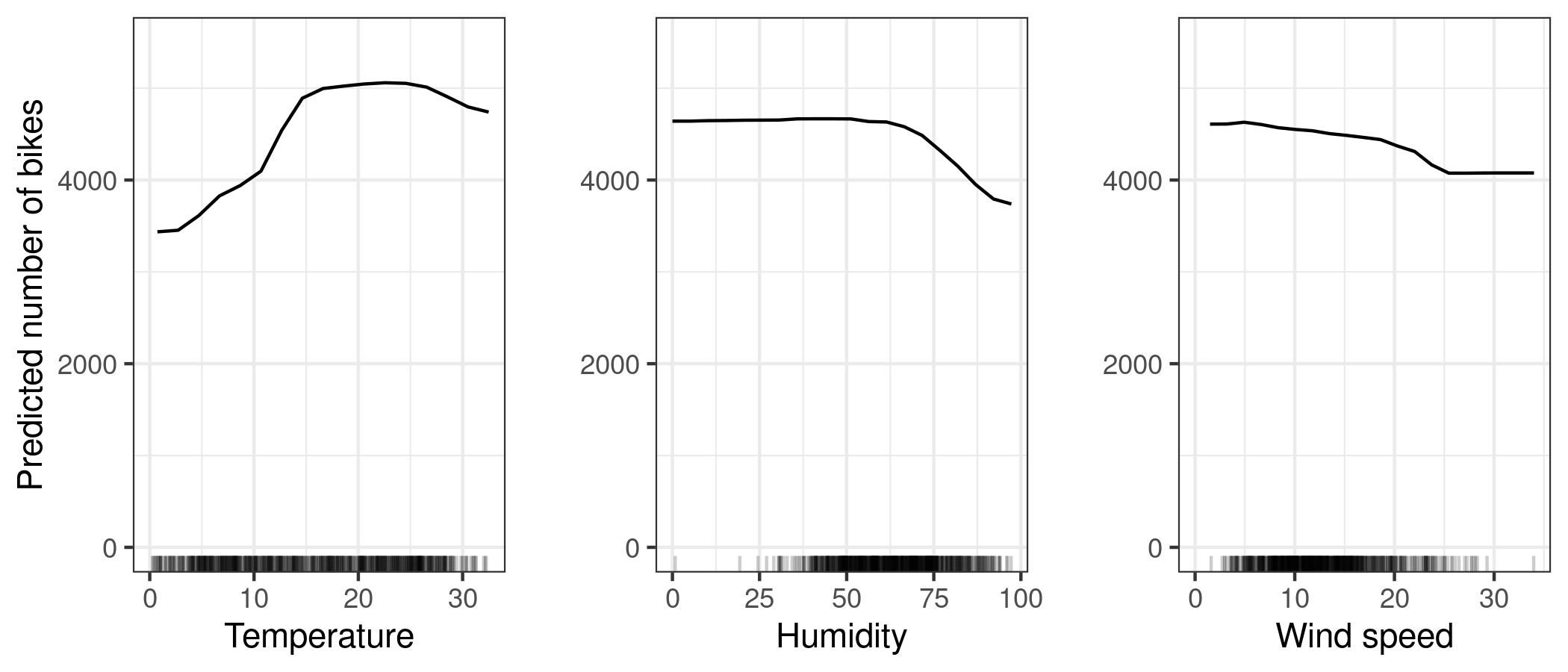

Partial Dependence Plots

- How does the model’s prediction change (in general) as we vary a feature?

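- Individual conditional expectation (ICE) plot: for each row in the test set, vary one feature over a grid of values (keeping the row’s other features fixed) and plot the prediction; this gives one curve per row

- Partial dependence plot (PDP): for each grid value, set the feature to that value in every test-set row and plot the average prediction; this is the average of the ICE curves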
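The two definitions above can be sketched directly. The model and the three test rows are hypothetical, chosen so that the effect of feature 0 depends on feature 1. For real models, scikit-learn's `sklearn.inspection.PartialDependenceDisplay.from_estimator` can draw both plots (`kind="both"`).

```python
def black_box(row):
    # Hypothetical model with an interaction: the effect of x0 depends on x1.
    x0, x1 = row
    return x0 * x1 + x0


def ice_curves(model, X, feature, grid):
    """One curve per row: predictions as `feature` sweeps over `grid`, other features fixed."""
    curves = []
    for row in X:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature] = value  # overwrite only the feature being varied
            curve.append(model(modified))
        curves.append(curve)
    return curves


def partial_dependence(model, X, feature, grid):
    """Partial dependence: the average of the ICE curves at each grid value."""
    curves = ice_curves(model, X, feature, grid)
    return [sum(curve[k] for curve in curves) / len(curves) for k in range(len(grid))]


X_test = [(0.0, 1.0), (1.0, -1.0), (2.0, 3.0)]  # hypothetical test rows
grid = [0.0, 1.0, 2.0]
print(ice_curves(black_box, X_test, feature=0, grid=grid))
# [[0.0, 2.0, 4.0], [0.0, 0.0, 0.0], [0.0, 4.0, 8.0]]
print(partial_dependence(black_box, X_test, feature=0, grid=grid))
# [0.0, 2.0, 4.0]
```

The second row's ICE curve is flat while the others rise, a difference the single averaged PDP curve hides; this is why ICE curves are often plotted alongside the PDP.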

Read more:

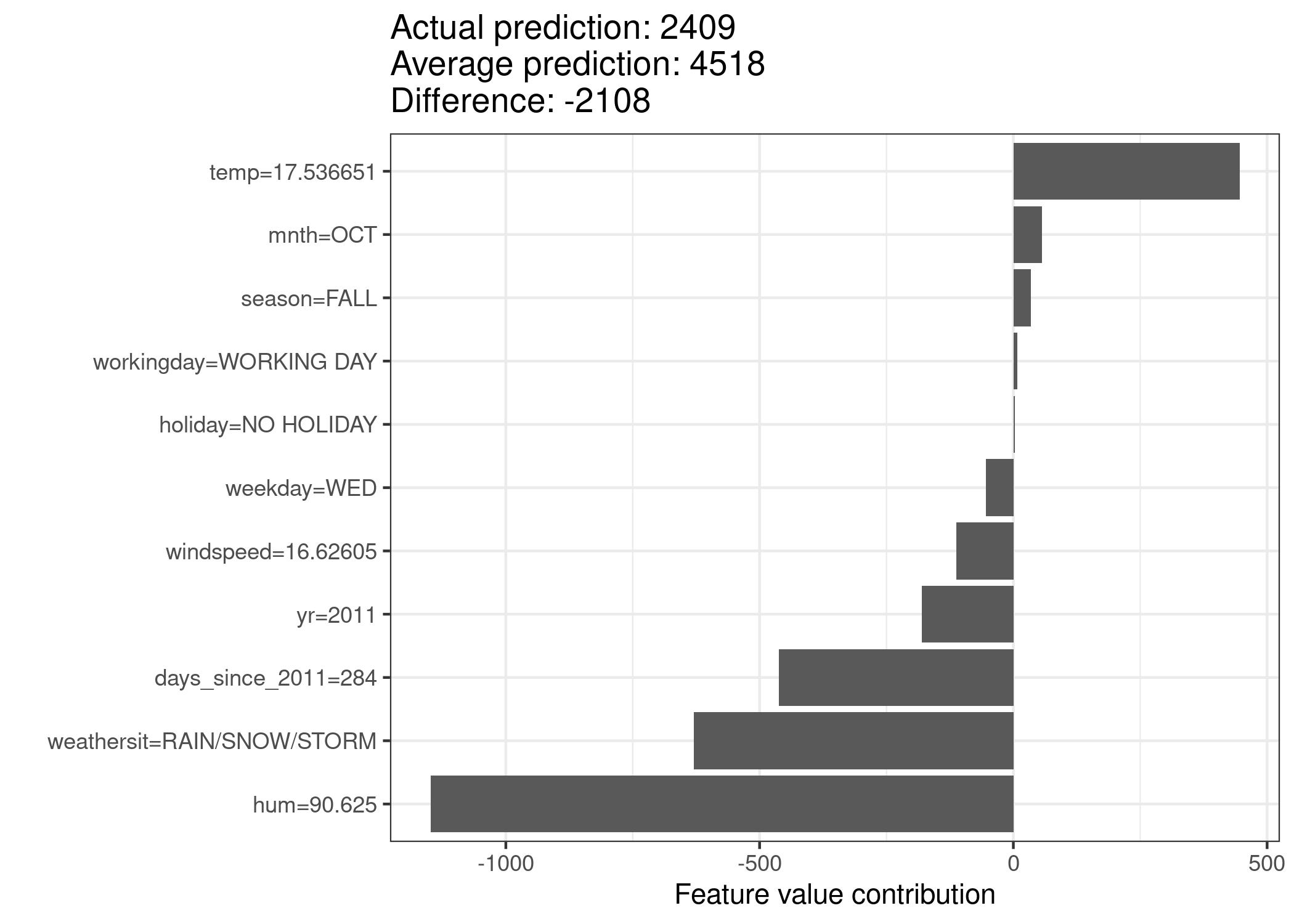

Local Explanations

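- For a particular prediction, which features were most important?

  - The answer might differ from the global feature importance

- SHAP: Shapley values from cooperative game theory

  - intuition: how much does each feature push this prediction away from the average prediction? A feature’s Shapley value is its marginal contribution, averaged over all orders in which the features could be added; the values sum to the prediction minus the average prediction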
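Exact Shapley values can be computed by brute force for a handful of features. This sketch makes a simplifying assumption: a "removed" feature is replaced by a single hypothetical baseline value (all zeros here), whereas SHAP implementations typically average over a background dataset. The model and input are hypothetical. Enumerating every coalition costs exponential time in the number of features, which is why the `shap` package uses faster, model-specific algorithms (for example `shap.TreeExplainer` for tree ensembles).

```python
from itertools import combinations
from math import factorial


def black_box(x):
    # Hypothetical model: a linear term plus an interaction between features 1 and 2.
    return 2.0 * x[0] + x[1] * x[2]


def value(model, x, baseline, coalition):
    """Prediction when only features in `coalition` take x's values; the rest use the baseline."""
    z = [x[i] if i in coalition else baseline[i] for i in range(len(x))]
    return model(z)


def shapley_values(model, x, baseline):
    """Weighted average of each feature's marginal contribution over all coalitions."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Fraction of feature orderings in which exactly the features in s precede i.
                weight = factorial(len(s)) * factorial(n - len(s) - 1) / factorial(n)
                phi[i] += weight * (value(model, x, baseline, s | {i}) - value(model, x, baseline, s))
    return phi


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]  # hypothetical reference point
phi = shapley_values(black_box, x, baseline)
print([round(v, 6) for v in phi])  # [2.0, 3.0, 3.0]
```

The linear term is credited entirely to feature 0, the interaction term (6.0) is split equally between features 1 and 2, and the values sum to the prediction minus the baseline prediction (8.0 - 0.0).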

Predicting the “Man of the Match” award in a soccer game:

Read more:

- Interpretable Machine Learning book chapter (source for the bikeshare example)

- Kaggle course (source for the soccer example): SHAP Values

- SHAP: A Unified Approach to Interpreting Model Predictions

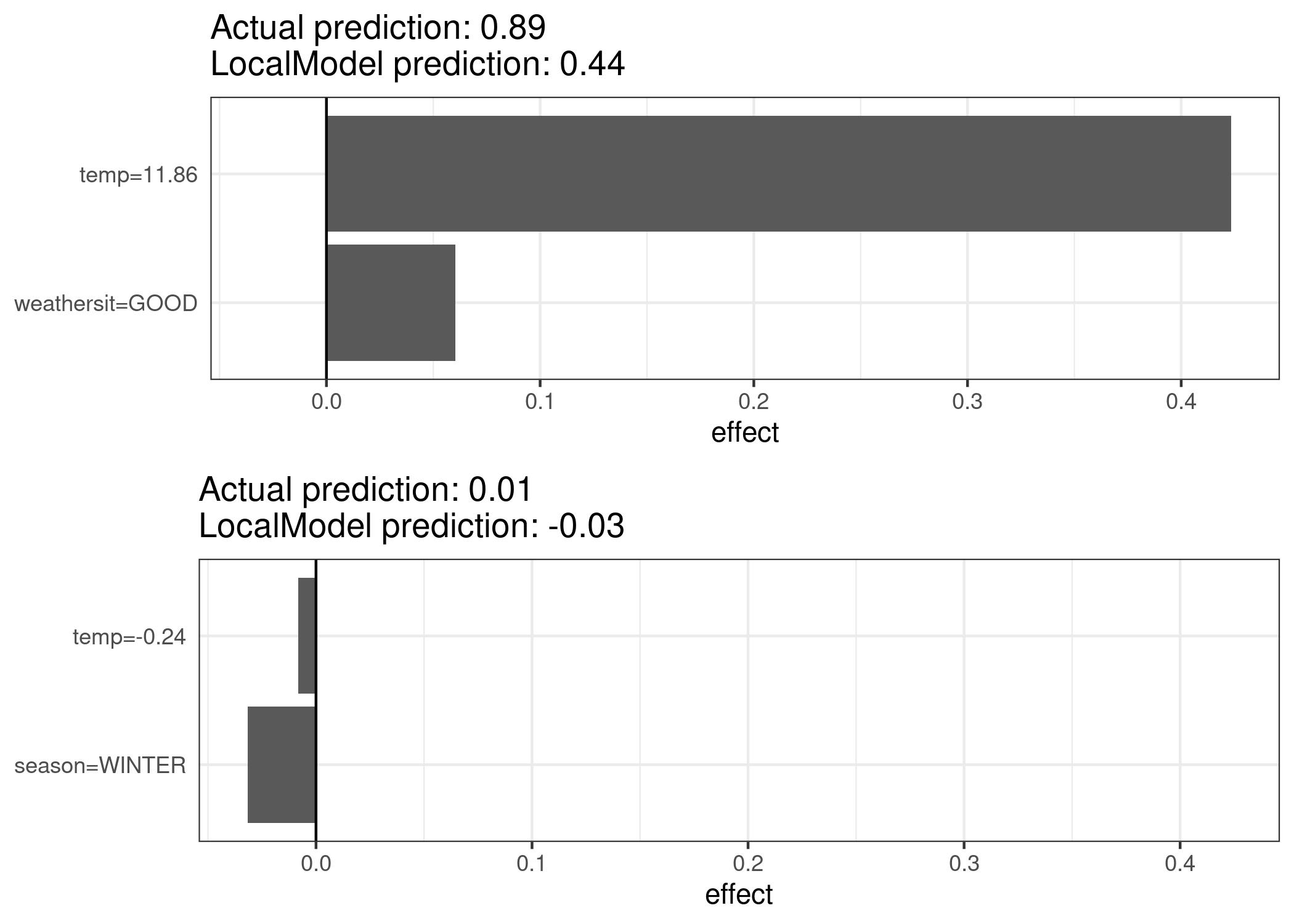

Local Explanations (LIME)

- Local Interpretable Model-Agnostic Explanations

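- Train a simple, interpretable model (e.g., a sparse linear model) to approximate the black-box model’s predictions locally: sample perturbed points near the instance being explained, weight them by their proximity to it, and fit the simple model to the black-box predictions on those points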
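A simplified, tabular version of this idea can be sketched as below. It is not the `lime` package (which uses its own sampling scheme and interpretable representations); the model, the Gaussian perturbation `scale`, the `kernel_width`, and the sample count are all assumptions. The surrogate is a proximity-weighted linear regression solved through the normal equations.

```python
import math
import random


def black_box(x):
    # Hypothetical nonlinear model of two features.
    return math.sin(x[0]) + x[1] ** 2


def solve(A, b):
    """Solve the small square system A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(A[i]) + [b[i]] for i in range(n)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in reversed(range(n)):
        w[r] = (M[r][n] - sum(M[r][c] * w[c] for c in range(r + 1, n))) / M[r][r]
    return w


def lime_explain(model, x0, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x0; return its per-feature slopes."""
    rng = random.Random(seed)
    # 1. Sample perturbed points near the instance being explained.
    Z = [[xi + rng.gauss(0, scale) for xi in x0] for _ in range(n_samples)]
    y = [model(z) for z in Z]
    # 2. Weight each sample by its proximity to x0 (Gaussian kernel).
    weights = [
        math.exp(-sum((zi - xi) ** 2 for zi, xi in zip(z, x0)) / kernel_width ** 2)
        for z in Z
    ]
    # 3. Weighted least squares for y ~ b0 + sum_j b_j * (z_j - x0_j).
    F = [[1.0] + [zi - xi for zi, xi in zip(z, x0)] for z in Z]
    p = len(x0) + 1
    A = [[sum(w * f[i] * f[j] for w, f in zip(weights, F)) for j in range(p)] for i in range(p)]
    b = [sum(w * f[i] * t for w, f, t in zip(weights, F, y)) for i in range(p)]
    beta = solve(A, b)
    return beta[1:]  # drop the intercept; keep one local slope per feature


slopes = lime_explain(black_box, [0.0, 1.0])
print([round(s, 2) for s in slopes])  # expected to be near the local gradient [1, 2]
```

At the point (0, 1) the model's local gradient is (cos 0, 2 · 1) = (1, 2), so the surrogate's slopes should land close to those values even though the model itself is nonlinear.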

Read more:

Interpretable Models

Instead of making a black-box model interpretable, we can use an interpretable model from the start.

Simple Models

- Linear regression, logistic regression (with a small number of features and few interactions)

  - Lasso (L1) regularization can help select a small number of features by driving the other coefficients to exactly zero

- Shallow decision trees

- Naive Bayes

- k-Nearest Neighbors
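To see why Lasso selects features, here is a minimal coordinate-descent sketch of the objective (1/(2n))·‖y − Xw‖² + alpha·‖w‖₁, the same form scikit-learn's `sklearn.linear_model.Lasso` documents. Assumptions: no intercept (the synthetic features are already centered), a fixed iteration count instead of a convergence check, and hypothetical data in which only features 0 and 1 matter.

```python
import random


def soft_threshold(rho, alpha):
    """Shrink rho toward zero by alpha; values within [-alpha, alpha] become exactly 0."""
    if rho > alpha:
        return rho - alpha
    if rho < -alpha:
        return rho + alpha
    return 0.0


def lasso(X, y, alpha, n_iters=100):
    """Coordinate descent for (1/(2n))*||y - Xw||^2 + alpha*||w||_1 (no intercept)."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(n_iters):
        for j in range(d):
            # Partial residual: the target minus the current prediction with feature j left out.
            r = [y[i] - sum(X[i][k] * w[k] for k in range(d) if k != j) for i in range(n)]
            rho = sum(X[i][j] * r[i] for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            w[j] = soft_threshold(rho, alpha) / z
    return w


# Hypothetical data: only features 0 and 1 matter; features 2 and 3 are pure noise.
rng = random.Random(0)
X = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(200)]
y = [3.0 * row[0] - 2.0 * row[1] + rng.gauss(0, 0.1) for row in X]

w = lasso(X, y, alpha=0.1)
print([round(v, 2) for v in w])
```

The soft-thresholding step sets a coefficient to exactly zero whenever its correlation with the residual is below `alpha`, so the noise features drop out of the model entirely instead of keeping small nonzero weights.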

Read more:

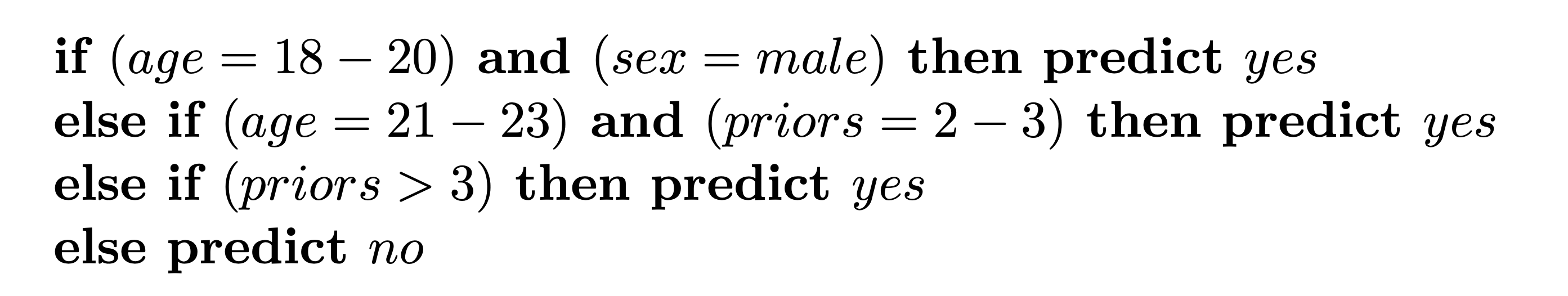

Decision Rule Lists

- An ordered list of if-then rules: the first rule whose condition holds determines the prediction, and a default rule at the end covers everything else

- Example: CORELS (Certifiably Optimal RulE ListS)
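The sketch below shows how a rule list is applied once it has been learned. The rules, feature names, and labels are hypothetical and made up for illustration; they are not a learned CORELS model. CORELS's contribution is searching for the optimal list (with a certificate of optimality), which is not shown here.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, float]
Rule = Tuple[str, Callable[[Record], bool], str]

# Hypothetical rule list: conditions, thresholds, and labels are illustrative only.
RULES: List[Rule] = [
    ("age < 21 and priors >= 1", lambda r: r["age"] < 21 and r["priors"] >= 1, "high risk"),
    ("priors > 3", lambda r: r["priors"] > 3, "high risk"),
]
DEFAULT = "low risk"


def predict_with_reason(record: Record) -> Tuple[str, str]:
    """Return (prediction, rule that fired); rules are checked in order and the first match wins."""
    for description, condition, label in RULES:
        if condition(record):
            return label, description
    return DEFAULT, "default (no rule matched)"


print(predict_with_reason({"age": 19, "priors": 2}))  # ('high risk', 'age < 21 and priors >= 1')
print(predict_with_reason({"age": 40, "priors": 5}))  # ('high risk', 'priors > 3')
print(predict_with_reason({"age": 40, "priors": 0}))  # ('low risk', 'default (no rule matched)')
```

Returning the rule that fired alongside the prediction is what makes the model self-explaining: the explanation is the model's actual decision path, not an approximation of it.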

Read more:

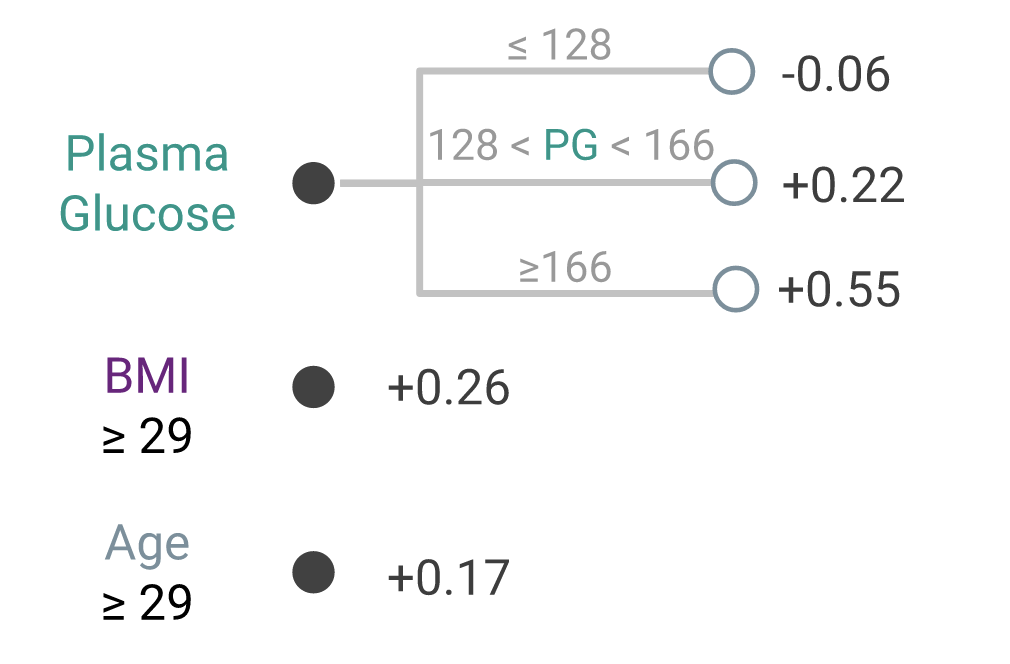

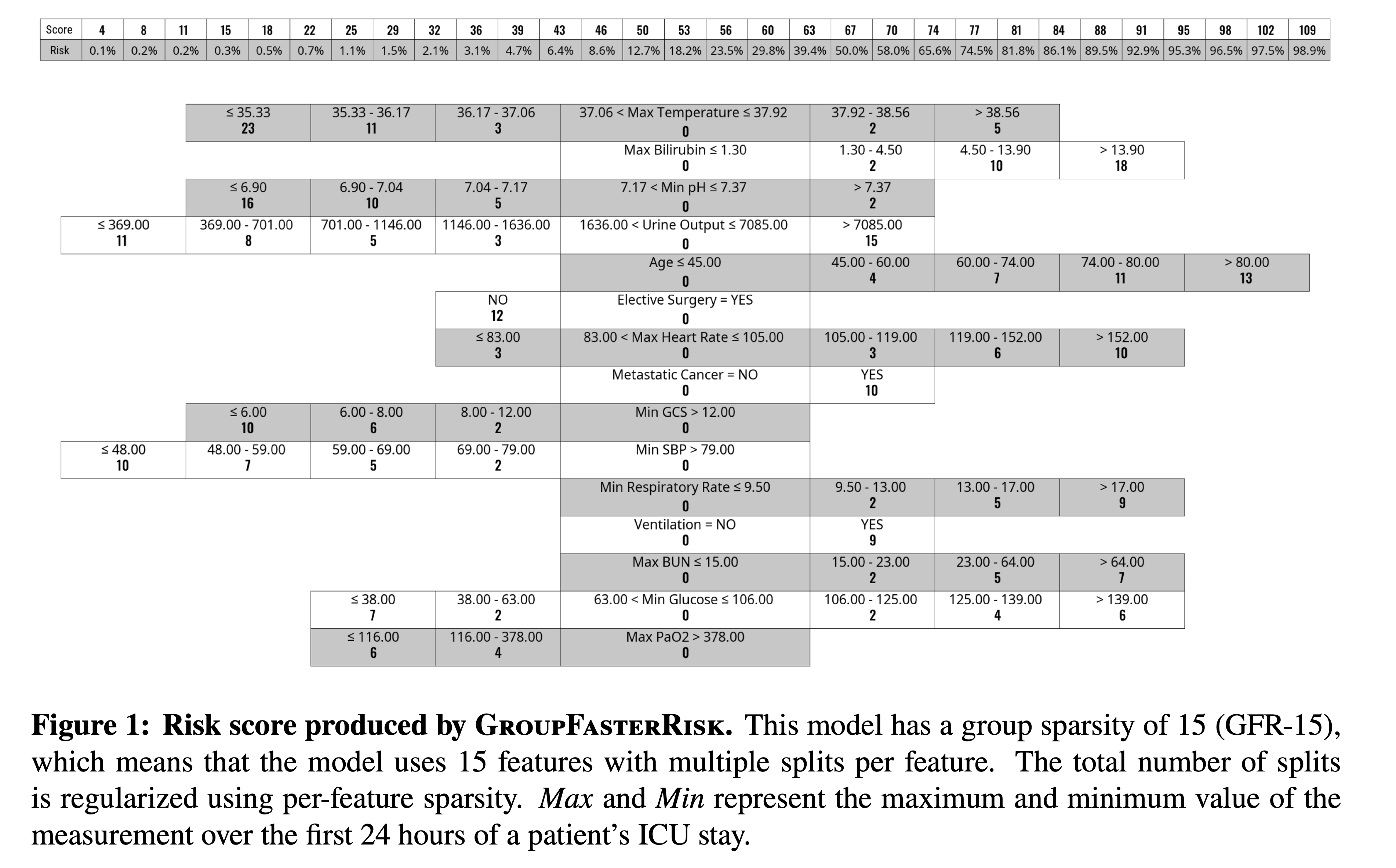

Additive Risk Score Models: FIGS

Fast Interpretable Greedy Tree Sums (FIGS): website, paper. Train a collection of decision trees, where the prediction is the sum of the predictions from each tree.

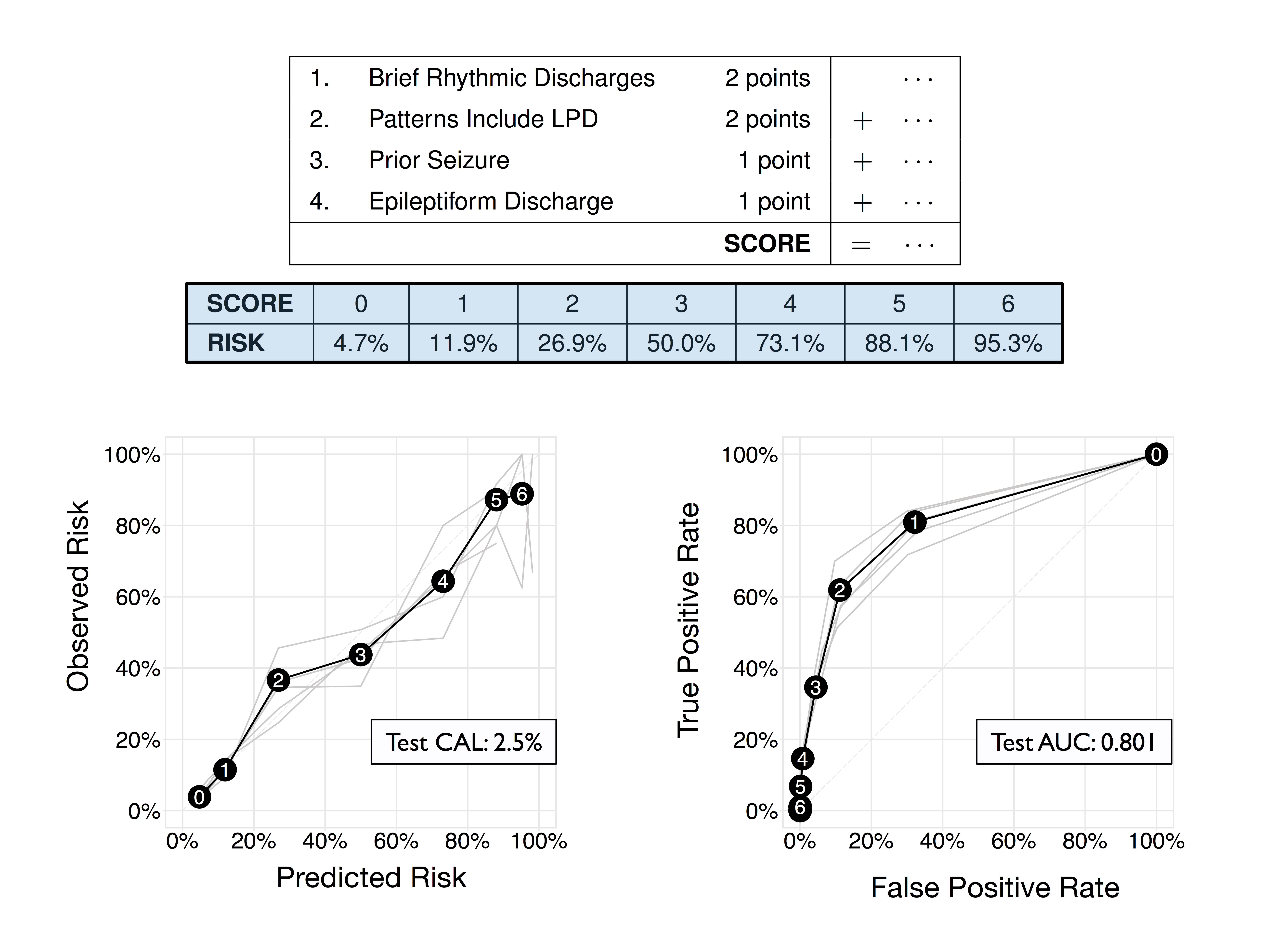
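The prediction step of a tree-sum model can be sketched as follows. The two trees, their feature names, and their leaf values are hypothetical and fixed by hand; FIGS's actual contribution, the greedy procedure that grows the trees, is not shown.

```python
def tree_1(x):
    # Hypothetical depth-2 tree splitting on feature "a", then on feature "b".
    if x["a"] <= 0.5:
        return 0.125
    return 0.5 if x["b"] <= 2.0 else 0.75


def tree_2(x):
    # Hypothetical single-split tree (a stump) on feature "c".
    return -0.25 if x["c"] <= 10.0 else 0.25


TREES = [tree_1, tree_2]


def predict_sum_of_trees(x):
    """Return the summed prediction and each tree's contribution to it."""
    contributions = [tree(x) for tree in TREES]
    return sum(contributions), contributions


print(predict_sum_of_trees({"a": 1.0, "b": 3.0, "c": 5.0}))  # (0.5, [0.75, -0.25])
```

Because the prediction is a plain sum, each tree's contribution can be read off separately, much like the points on a risk scorecard.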

Additive Risk Score Models: Risk Scorecards

ustunb/risk-slim: simple customizable risk scores in python

Fast and Interpretable Mortality Risk Scores for Critical Care Patients
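A scorecard of the kind Risk-SLIM learns is a logistic regression model with small integer coefficients: add up the points for each condition that holds, then map the total to a probability with the logistic function. The sketch below only applies such a scorecard; the conditions, point values, and offset are hypothetical, not taken from any published model.

```python
import math

# Hypothetical scorecard: conditions, points, and offset are made up for illustration.
SCORECARD = [
    ("age >= 75", lambda p: p["age"] >= 75, 2),
    ("systolic blood pressure < 90", lambda p: p["systolic_bp"] < 90, 3),
    ("on a ventilator", lambda p: p["ventilated"], 2),
]
OFFSET = -5


def risk_score(patient):
    """Sum the points for every condition that holds, then map the score to a probability."""
    score = sum(points for _, condition, points in SCORECARD if condition(patient))
    probability = 1.0 / (1.0 + math.exp(-(score + OFFSET)))  # logistic link
    return score, probability


print(risk_score({"age": 80, "systolic_bp": 85, "ventilated": False}))  # (5, 0.5)
```

Because the points are small integers, a clinician can compute the score by hand and look the risk up in a short score-to-probability table.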

Going Further

- Interpretable Machine Learning

- Interpretable Machine Learning: Fundamental Principles and 10 Grand Challenges

- Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead (Nature Machine Intelligence)

- Dissecting scientific explanation in AI (sXAI): A case for medicine and healthcare (ScienceDirect)